Reconfigurable Speaker Arrays for Spatial Hearing Testing

Principal Investigator: T.E. von Wiegand

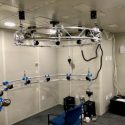

We have developed reconfigurable speaker arrays and ancillary electronic equipment for experimentation and clinical testing. One example is a pair of suspended arrays on two radii, installed in a sound booth at a Boston Children’s Hospital (BCH) clinic.
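When laying out arrays on two radii, it helps to compute each loudspeaker's position and the extra acoustic travel time from the outer radius. The sketch below assumes two concentric horizontal arcs centred on the listener's head that share the same azimuths; the azimuths and radii are hypothetical, not the BCH booth's actual layout.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value in air at about 20 degrees C


def arc_positions(radius_m, azimuths_deg, height_m=0.0):
    """Return (x, y, z) coordinates in metres for loudspeakers on a horizontal
    arc centred on the listener. x points straight ahead, y to the listener's
    left (positive azimuth = counterclockwise when viewed from above)."""
    positions = []
    for az in azimuths_deg:
        a = math.radians(az)
        positions.append((radius_m * math.cos(a), radius_m * math.sin(a), height_m))
    return positions


def arrival_delay_ms(radius_m, c=SPEED_OF_SOUND_M_S):
    """Propagation delay from a loudspeaker at radius_m to the head centre."""
    return radius_m / c * 1000.0


# Hypothetical layout: two arcs (1.0 m and 1.5 m) sharing the same azimuths.
azimuths = [-90, -60, -30, 0, 30, 60, 90]
inner = arc_positions(1.0, azimuths)
outer = arc_positions(1.5, azimuths)
extra_delay_ms = arrival_delay_ms(1.5) - arrival_delay_ms(1.0)
```

The delay difference is what a playback system would need to compensate if signals from the two radii are meant to arrive simultaneously.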

[Work supported by contract with Boston Children’s Hospital.]

Head-Worn Microphone Array R&D Projects

Principal Investigator: Various

Microphone array systems in multiple configurations (4×4, 2×4×2) were designed and built using digital MEMS microphones. All configurations were designed to be head-worn for use in spatial hearing and attention experiments. The benefits of this architecture include resistance to electronic noise pickup (hum, buzz, power-line interference) and closer matching of individual microphone elements. The I2S data from the 16 PCM microphones are combined into a single data stream that is available to the host via a USB Audio Device Class 2.0 (UAC2.0) streaming bridge.
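The step of combining 16 microphones into one stream can be illustrated as follows. This sketch assumes (hypothetically) that the mics are wired two per I2S data line, one in the left slot and one in the right, giving eight stereo lines, and that the host expects frame-interleaved 32-bit samples, as a multichannel USB audio stream typically delivers. The channel ordering is an illustrative choice, not the actual bridge firmware.

```python
import numpy as np


def combine_i2s_lines(lines):
    """Merge per-line stereo I2S captures into one multichannel frame array.

    lines: list of integer arrays, each shaped (n_frames, 2), holding the
    left-slot and right-slot samples of one I2S data line.
    Returns an (n_frames, 2 * len(lines)) array with channel order
    line0-L, line0-R, line1-L, line1-R, ...
    """
    n_frames = lines[0].shape[0]
    if any(line.shape != (n_frames, 2) for line in lines):
        raise ValueError("every line must be shaped (n_frames, 2)")
    return np.concatenate(lines, axis=1)


def to_interleaved_bytes(frames):
    """Serialize as little-endian 32-bit samples, frame by frame
    (all channels of frame 0, then all channels of frame 1, ...)."""
    return frames.astype("<i4").tobytes()
```

Example: eight lines whose samples encode their channel index.

```python
lines = [np.tile([2 * k, 2 * k + 1], (4, 1)) for k in range(8)]
frames = combine_i2s_lines(lines)     # shape (4, 16)
payload = to_interleaved_bytes(frames)  # 4 frames x 16 ch x 4 bytes
```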

[This work was supported by grants and contracts from NIH, WPAFB, & BU.]

In-Line Hearing Aid Processor for Radio Communication

Principal Investigator: T.E. von Wiegand

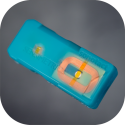

Communications-radio users with significant hearing loss can be accommodated by an in-line adapter programmed with their custom profile. A DSP hearing aid processor was designed to connect between a Harris Falcon PRC152 radio and a Peltor 300-ohm ANC headset and to derive stable, quiet power from the U-329 connector. The device is programmed through a GUI, and multiple hearing loss profiles can be saved in the adapter for pushbutton recall as needed.
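To make "custom profile" and "pushbutton recall" concrete, here is a minimal sketch of a preset store. It assumes, purely for illustration, that a profile is derived from audiogram thresholds with the classic half-gain rule (insertion gain ≈ half the threshold in dB HL); the band centres, slot count, and class name are hypothetical, and the document does not say which fitting rule the adapter actually uses.

```python
from dataclasses import dataclass, field

# Hypothetical band centre frequencies (Hz) for the per-band gains.
BANDS_HZ = (250, 500, 1000, 2000, 4000, 8000)


def half_gain(thresholds_db_hl):
    """Half-gain rule: per-band insertion gain = 0.5 x hearing threshold."""
    return [0.5 * t for t in thresholds_db_hl]


@dataclass
class ProfileStore:
    """Hypothetical preset memory with a fixed number of pushbutton slots."""
    slots: int = 4
    profiles: dict = field(default_factory=dict)

    def save(self, slot, name, thresholds_db_hl):
        if not 0 <= slot < self.slots:
            raise IndexError("no such preset slot")
        if len(thresholds_db_hl) != len(BANDS_HZ):
            raise ValueError("one threshold per band is required")
        self.profiles[slot] = (name, half_gain(thresholds_db_hl))

    def recall(self, slot):
        """Return (name, per-band gains in dB) for a pushbutton press."""
        return self.profiles[slot]


store = ProfileStore()
store.save(0, "user A", [20, 30, 40, 50, 60, 70])
```

A real fitting would typically use a prescriptive formula and add compression and output limiting; the half-gain rule is used here only because it is short and well known.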

[Work supported by contract with Air Force Research Laboratory.]

Compact Loudspeaker Array for Spatial Hearing Tests

Principal Investigator: P.M. Zurek

This project addresses the need for a standardized spatial-hearing test system that fits easily into existing audiometric sound booths. An eight-loudspeaker array has been designed and developed and is being tested for inter-booth reliability at two test sites. (Loudspeakers from Orb Audio.)

[Work supported through a contract with the U.S. Army Medical Research and Development Command.]

Binaural and Spatial Hearing

Principal Investigator: P.M. Zurek

This area of work encompasses basic research into questions of how people use their two ears to localize sounds and to understand speech in noisy and reverberant environments. Of special interest is the study of phenomena related to the precedence effect in sound localization.
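Precedence-effect experiments commonly use a "lead" sound followed a few milliseconds later by a "lag" copy from another direction; at short delays listeners tend to hear one fused sound located near the lead. The sketch below generates such a two-channel click pair (for example, one channel per loudspeaker). The function name, lead-in time, and parameters are illustrative assumptions, not the stimuli used in this research.

```python
import numpy as np


def lead_lag_clicks(delay_ms, fs=48000, dur_s=0.05, click_samples=1, lag_gain_db=0.0):
    """Two-channel lead/lag click stimulus.

    Channel 0 carries the lead click; channel 1 carries the lag click,
    delayed by delay_ms and scaled by lag_gain_db relative to the lead.
    """
    n = int(round(dur_s * fs))
    out = np.zeros((n, 2))
    onset = int(round(0.005 * fs))  # 5-ms silent lead-in before the first click
    lag = onset + int(round(delay_ms * 1e-3 * fs))
    if lag + click_samples > n:
        raise ValueError("delay too long for the stimulus duration")
    out[onset:onset + click_samples, 0] = 1.0
    out[lag:lag + click_samples, 1] = 10 ** (lag_gain_db / 20)
    return out


stim = lead_lag_clicks(2.0)  # 2-ms lead-lag delay at 48 kHz -> 96-sample offset
```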

[This work is being done in collaboration with Dr. Richard Freyman of the University of Massachusetts and is supported by a grant from NIH/NIDCD.]

Military Hearing Preservation (MHP) Training Kit

Principal Investigator: P.M. Zurek

It is important for noise-exposed individuals to understand what can happen if they do not use hearing protection. The goal of this project is to develop efficient and effective tools for conveying the importance of hearing protection to military personnel. The MHP system allows a hearing healthcare professional to tailor computer-based informational presentations to the expected hearing losses and interests of target audiences. Presentations are assembled from a large collection of modules that include hearing loss simulations and testimonials from hearing-impaired service members. MHP includes a complete hearing loss and tinnitus simulator, as well as a listening library that lets the noise-exposed worker select the sounds of most interest.
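The simplest ingredient of a hearing loss simulation is frequency-dependent attenuation that follows an audiogram. The sketch below applies that attenuation in the FFT domain, interpolating the threshold shift on a log-frequency axis. It is an assumed, deliberately crude model: it ignores loudness recruitment and reduced frequency selectivity, which a complete simulator such as the one in MHP would likely also address.

```python
import numpy as np


def simulate_attenuation(x, fs, audiogram_hz, loss_db):
    """Attenuate each frequency component of x by the audiogram threshold
    shift (dB), interpolated on a log-frequency axis.

    audiogram_hz must be ascending. Frequencies outside the audiogram range
    take the nearest endpoint's loss (np.interp clamps at the ends).
    """
    audiogram_hz = np.asarray(audiogram_hz, dtype=float)
    X = np.fft.rfft(x)
    f = np.fft.rfftfreq(len(x), 1 / fs)
    logf = np.log10(np.maximum(f, audiogram_hz[0]))  # avoid log10(0) at DC
    atten_db = np.interp(logf, np.log10(audiogram_hz), loss_db)
    return np.fft.irfft(X * 10 ** (-atten_db / 20), n=len(x))


fs = 16000
t = np.arange(fs) / fs
tone = np.sin(2 * np.pi * 1000 * t)
# Hypothetical sloping loss: 0 dB at 250 Hz, 40 dB at 1 kHz, 60 dB at 4 kHz.
muffled = simulate_attenuation(tone, fs, [250, 1000, 4000], [0, 40, 60])
```

The 1 kHz tone comes out 40 dB (a factor of 100) lower, matching the audiogram value at that frequency.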

[This work is being done in collaboration with Dr. Lynne Marshall of the Naval Submarine Medical Research Laboratory and is supported by the Office of Naval Research.]

A Wireless Self-Contained Tactile Aid

Principal Investigator: P.M. Zurek

This work is aimed at developing a tactile aid for the deaf that is contained entirely in a single small unit worn on the arm like a wristwatch. Two models of such a tactile aid will eventually be developed. The first, which is the primary motivation for this effort, is a unit that provides speech-based tactile stimulation for infants and young children while they are awaiting a cochlear implant. This unit is a small vibration-display device designed with special attention to the safety and usage requirements of this population. The second model will be an adult unit, slightly larger than the children’s unit, with additional wireless receiver functionality for displaying alarms that signal remote events (e.g., doorbell, smoke alarm) and for delivering speech sensed by a remote microphone.
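One common way to turn speech into vibration is to extract the speech amplitude envelope and use it to modulate a carrier near the frequency range where the skin is most sensitive to vibration (roughly 200 to 300 Hz). The sketch below does exactly that. The smoothing window, 250 Hz carrier, and peak normalization are illustrative assumptions; the document does not describe the device's actual speech-to-tactile mapping.

```python
import numpy as np


def tactile_drive(speech, fs, carrier_hz=250.0, smooth_ms=10.0):
    """Map a speech waveform to a vibrotactile drive signal.

    Envelope: full-wave rectification followed by a moving-average smoother.
    Output: the normalized envelope multiplied by a fixed-frequency sine carrier.
    """
    win = max(1, int(round(smooth_ms * 1e-3 * fs)))
    env = np.convolve(np.abs(speech), np.ones(win) / win, mode="same")
    peak = env.max()
    if peak > 0:
        env = env / peak  # scale so the loudest moment drives the actuator fully
    t = np.arange(len(speech)) / fs
    return env * np.sin(2 * np.pi * carrier_hz * t)


fs = 8000
burst = np.zeros(800)
burst[300:500] = 1.0  # stand-in for a short speech segment
drive = tactile_drive(burst, fs)
```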

[Work supported by a grant from NIH/NIDCD.]

Software for Auditory Prostheses

Principal Investigator: R.L. Goldsworthy

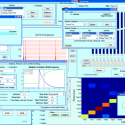

This project develops software with research and clinical applications in the hearing sciences. For research, the software will let investigators work at a higher level of abstraction, providing monitoring and control applications for designing complex sound processing strategies for hearing devices. The clinical applications are designed to improve communication among audiologists, speech-language therapists, and hearing-impaired individuals through auditory training and assessment activities that are accessible from any web-connected device.
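Auditory assessment activities often rely on adaptive procedures. As an assumed example (the document does not name the procedures used), here is a 2-down/1-up staircase, which converges near the 70.7%-correct point: the level drops after two consecutive correct responses and rises after any error, and the levels at direction changes (reversals) are recorded for threshold estimation.

```python
class TwoDownOneUp:
    """2-down/1-up adaptive staircase (lower level = harder task)."""

    def __init__(self, start_level, step):
        self.level = start_level
        self.step = step
        self.reversals = []      # levels at which the track changed direction
        self._correct_run = 0
        self._last_dir = None    # -1 = last move was down, +1 = up

    def update(self, correct):
        """Record one response and return the level for the next trial."""
        if correct:
            self._correct_run += 1
            if self._correct_run < 2:
                return self.level  # need two in a row before stepping down
            self._correct_run = 0
            direction = -1
        else:
            self._correct_run = 0
            direction = +1
        if self._last_dir is not None and direction != self._last_dir:
            self.reversals.append(self.level)
        self._last_dir = direction
        self.level += direction * self.step
        return self.level


track = TwoDownOneUp(start_level=60, step=2)
levels = [track.update(r) for r in [True, True, True, False, True, True]]
```

Here `levels` is `[60, 58, 58, 60, 60, 58]` and the reversals occur at 58 and 60. A threshold is typically estimated by averaging the last several reversal levels.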

[Work supported by a grant from NIH/NIDCD.]

Conversation Assistant for Noisy Environments

Principal Investigator: T.E. von Wiegand

The Conversation Assistant for Noisy Environments (CANE) project aims to develop new devices that assist multi-way, face-to-face conversation in noisy environments. These devices are motivated by the common problem of noise interference experienced by hearing aid and cochlear implant users, as well as by unaided listeners in noisy settings such as restaurants. Current wireless assistive listening devices for noisy settings usually require manual channel selection to choose among multiple talkers. Such systems do not assist more than one person in a group, nor do they allow the user to listen easily and selectively to a particular talker in a group.

CANE devices use a novel combination of signal modulation and transmission techniques to achieve multi-way speech pick-up, amplification, and delivery by earphone for each person in a group. Each talker’s speech is picked up with either a lanyard-mounted or head-mounted microphone and transmitted to all members of the group. Through the unique design of the sensor, augmented by head pointing when desired, each group member using a receiver can further highlight the talker to be attended to. CANE technology will be used to develop products for people, whether or not they use hearing aids, who need listening assistance in noise. In addition, CANE devices can readily be integrated into protective headsets to enable natural and private face-to-face communication in high-noise workplaces.
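The idea of highlighting the talker a listener faces can be illustrated with a simple gain rule: each received talker channel gets a gain that depends on the angle between the listener's head direction and that talker's direction, and the weighted channels are summed. This is a hypothetical sketch. The Gaussian-shaped emphasis, beam width, and -12 dB floor are assumptions, and the document does not disclose how the CANE sensor actually estimates talker direction.

```python
import math


def pointing_gains(head_az_deg, talker_az_deg, beam_width_deg=30.0, floor_db=-12.0):
    """Linear gain per talker, emphasizing the talker the listener faces.

    Gain ranges from 0 dB for a talker straight ahead down toward floor_db
    for talkers far off-axis, so no talker is ever fully muted.
    """
    gains = []
    for az in talker_az_deg:
        # Smallest signed angle between head direction and talker, in (-180, 180]
        diff = (az - head_az_deg + 180.0) % 360.0 - 180.0
        g_db = floor_db * (1.0 - math.exp(-0.5 * (diff / beam_width_deg) ** 2))
        gains.append(10 ** (g_db / 20))
    return gains


def mix(talker_signals, gains):
    """Sum per-talker sample lists, each weighted by its gain."""
    return [sum(g * s for g, s in zip(gains, samples)) for samples in zip(*talker_signals)]


g = pointing_gains(0.0, [0.0, 90.0, 180.0])
```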

[Work supported by a grant from NIH/NIDCD.]

Functional Hearing Evaluation for Military Occupational Specialties

Principal Investigator: J.G. Desloge

Sensimetrics provides software and hardware support for research conducted at the Audiology and Speech Center at the Walter Reed National Military Medical Center in Bethesda, MD. This effort spans the following activities. In all cases, Sensimetrics designed the systems (hardware and software) and continues to provide support on an as-needed basis. This work is supported by the U.S. Army Medical Research and Materiel Command under Contract No. W81XWH-13-C-0194.

HLSim Real-Time, Wearable Hearing-loss Simulator Units

These helmet-mounted devices enable the wearer to experience the ambient sound environment through a simulated hearing impairment. The simulation ranges from ‘no loss’ to threshold shifts in excess of 90 dB relative to normal hearing. Further, the system incorporates a ‘blast sensor’, which enables it to detect loud sounds and to simulate temporary threshold shift in response to these loud sounds. These units are used to assess soldier battle effectiveness in the presence of both pre-existing and battle-induced hearing impairment.
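The blast-sensor behaviour can be sketched as a small state machine: when a peak level exceeds a trigger level, a temporary threshold shift (TTS) is added in proportion to the excess, capped at a maximum, and the shift then recovers over time. All numbers below (140 dB SPL trigger, 1 dB of shift per dB of excess, 40 dB cap, 10-minute exponential recovery) are hypothetical. Real TTS recovery is not simply exponential, and this is not the HLSim units' actual model.

```python
import math


class BlastTTS:
    """Toy temporary-threshold-shift model driven by a peak-level detector."""

    def __init__(self, trigger_db_spl=140.0, shift_per_db=1.0,
                 max_shift_db=40.0, recovery_tau_s=600.0):
        self.trigger_db_spl = trigger_db_spl
        self.shift_per_db = shift_per_db
        self.max_shift_db = max_shift_db
        self.recovery_tau_s = recovery_tau_s
        self.shift_db = 0.0

    def step(self, peak_db_spl, dt_s):
        """Advance by dt_s seconds with the given peak level; return shift in dB."""
        # Recovery since the previous update
        self.shift_db *= math.exp(-dt_s / self.recovery_tau_s)
        # New exposure adds shift in proportion to the excess over the trigger
        if peak_db_spl > self.trigger_db_spl:
            added = self.shift_per_db * (peak_db_spl - self.trigger_db_spl)
            self.shift_db = min(self.max_shift_db, self.shift_db + added)
        return self.shift_db


model = BlastTTS()
after_blast = model.step(150.0, 0.0)     # 10 dB above trigger -> 10 dB shift
after_recovery = model.step(100.0, 600.0)  # one time constant later
```

The simulated threshold at any moment would be the pre-existing loss plus `shift_db`.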

AIMS Real-time Intelligibility Disruption System

This system combines wireless gaming headsets equipped with boom microphones with custom software that allows up to eight users to communicate with each other in real time, with all speech transmitted between users processed for intelligibility disruption. The disruption is fully controllable, either through software on the host PC or through controls on each headset, and intelligibility can range from completely intelligible to completely unintelligible. This system is used to gain insight into just how intelligible speech must be for military personnel to perform their tasks effectively.
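A single control that sweeps speech from intelligible to unintelligible can be illustrated by mixing in noise at a controlled signal-to-noise ratio. The sketch maps a 0-to-1 control value linearly onto an SNR range. Additive Gaussian noise and the +30 dB / -20 dB endpoints are assumptions chosen for illustration; the document does not say what disruption processing AIMS actually applies.

```python
import numpy as np


def disrupt(speech, control, rng=None, snr_clear_db=30.0, snr_garbled_db=-20.0):
    """Add Gaussian noise at an SNR set by control (0 = near-clean, 1 = masked)."""
    if not 0.0 <= control <= 1.0:
        raise ValueError("control must be in [0, 1]")
    rng = np.random.default_rng() if rng is None else rng
    snr_db = snr_clear_db + control * (snr_garbled_db - snr_clear_db)
    noise = rng.standard_normal(len(speech))
    p_speech = np.mean(speech ** 2)
    p_noise = np.mean(noise ** 2)
    # Scale the noise so that p_speech / p_noise_scaled equals the target SNR
    noise *= np.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    return speech + noise


fs = 16000
speech = np.sin(2 * np.pi * 440 * np.arange(fs) / fs)  # stand-in for speech
degraded = disrupt(speech, 0.5, rng=np.random.default_rng(0))  # target SNR = 5 dB
```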

SyncAVPlayer Matlab-Callable Video Playback with Precise Control of AV Synchronization

This system allows a user to present video from within the Matlab environment. The video may be seamlessly integrated into the Matlab figures, and full control (play, pause, seek, etc.) is provided. Additionally, the user may play the video back with substituted audio that has been processed within Matlab. Finally, this system may be calibrated to the specific system hardware (i.e., the audio device, the graphics card, and the video monitor) in order to ensure that the audio and video streams are synchronized to within 1 msec (which is much tighter than the typical 30-70 msec synchronization evident in most off-the-shelf players such as QuickTime or VLC).
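Calibrating playback to the specific hardware amounts to measuring how long each path (audio device, graphics card plus monitor) takes from submission to physical output, then submitting each stream early by its own latency. The sketch below assumes latencies are measured by logging submit times and physically observed onsets (e.g., with a microphone and a photodiode) over repeated trials. The function names and numbers are hypothetical, not SyncAVPlayer's API.

```python
from statistics import mean, pstdev


def estimate_latency(submit_times_s, observed_onsets_s):
    """Mean and spread of output latency from repeated calibration trials."""
    lags = [o - s for s, o in zip(submit_times_s, observed_onsets_s)]
    return mean(lags), pstdev(lags)


def schedule_av(target_time_s, audio_latency_s, video_latency_s):
    """Submission times so that audio and video reach the viewer at target_time_s."""
    return target_time_s - audio_latency_s, target_time_s - video_latency_s


audio_lat, audio_jitter = estimate_latency([0.0, 1.0, 2.0], [0.05, 1.05, 2.05])
audio_submit, video_submit = schedule_av(10.0, audio_lat, 0.08)
```

Sub-millisecond synchronization is only achievable if the measured spread (jitter) on each path is itself well below 1 msec, so the spread is as important as the mean.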

SyncAVCapture System for Generating AV-Synchronized Video Recordings

This system allows videos to be recorded in such a manner that the audio and video streams are synchronized to within 1 msec. This is tighter than the traditional ‘clapper’ based approach, in which audio and video are synchronized to within one video frame (15-30 msec). The system consists of custom hardware designed to replace the clapper that is used to synchronize the audio and video at the start of the recording process, as well as software used to post-process the AV files to adjust the audio and video timestamps to achieve synchronization.
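The timestamp post-processing can be pictured as two steps: locate the sync event in the audio at sample resolution, then shift the audio timestamps so that this event coincides with the event's time in the video stream. This sketch assumes, hypothetically, that the sync hardware produces an audio pulse whose video-side time is already known precisely; how the actual hardware achieves sub-frame video timing is not described in the document.

```python
import numpy as np


def audio_event_time(audio, fs, threshold):
    """Time (s) of the first sample whose magnitude reaches threshold."""
    idx = np.flatnonzero(np.abs(audio) >= threshold)
    if idx.size == 0:
        raise ValueError("sync event not found in audio")
    return idx[0] / fs


def align_audio_timestamps(audio_ts_s, audio_event_s, video_event_s):
    """Shift audio timestamps so the audio event lands on the video event time."""
    offset = video_event_s - audio_event_s
    return [t + offset for t in audio_ts_s]


fs = 48000
audio = np.zeros(fs)
audio[4800] = 1.0                      # sync pulse at 0.1 s in the audio file
t_audio = audio_event_time(audio, fs, 0.5)
aligned = align_audio_timestamps([0.0, 0.5], t_audio, 0.1025)
```

At 48 kHz one sample is about 0.02 msec, so sample-level detection leaves ample margin within a 1-msec budget.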

Video-Camera-Mounted Binaural Recording System with AGC Compensation

This system is a head-sized binaural recording system designed to piggyback on existing video cameras so that a realistic binaural sound track can be captured to complement the video. Further, the system is designed so that the recorded audio can be processed to remove the effects of the camera’s automatic gain control (AGC), which is a must-have feature when recording environments whose sound levels span a large dynamic range. As a result, the recordings are level-true throughout their duration.
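Removing AGC effects requires knowing how the camera's gain varied over time. The sketch below assumes a hypothetical arrangement: a reference signal of known, constant RMS level passes through the same AGC, so its block-wise RMS reveals the applied gain, which is then divided out of the recorded signal. The document does not say how Sensimetrics' system estimates the gain; block-wise constant gain is also a simplification.

```python
import numpy as np


def estimate_agc_gain(reference, known_rms, block):
    """Block-wise AGC gain: observed reference RMS divided by its known RMS."""
    n_blocks = len(reference) // block
    blocks = reference[: n_blocks * block].reshape(n_blocks, block)
    return np.sqrt(np.mean(blocks ** 2, axis=1)) / known_rms


def undo_agc(signal, gains, block):
    """Divide out a piecewise-constant gain (one value per block)."""
    per_sample = np.repeat(gains, block)
    n = len(per_sample)
    return signal[:n] / np.maximum(per_sample, 1e-12)  # guard against zero gain


block = 100
true_gain = np.repeat([1.0, 0.5, 0.25], block)  # AGC turning the level down
reference = 0.5 * true_gain                      # known RMS of 0.5 before AGC
x = np.sin(np.linspace(0, 20 * np.pi, 3 * block))
recorded = x * true_gain
gains = estimate_agc_gain(reference, 0.5, block)
restored = undo_agc(recorded, gains, block)
```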

Matlab Toolbox for Bluetooth Android Tablet Communication and Control

This toolbox allows Android tablets to be connected to a Matlab server running on an experimental computer so that the tablets can serve as experimental stimulus-response devices.
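Because a Bluetooth serial (RFCOMM) link delivers a byte stream rather than discrete messages, the server and tablet need some framing convention. The sketch below shows one hypothetical convention: each message is JSON prefixed by a 4-byte big-endian length, and the receiver buffers bytes until a whole message has arrived. The message fields are invented for illustration; the toolbox's actual protocol is not described in the document.

```python
import json
import struct


def encode(msg):
    """Frame a dict as a 4-byte big-endian length followed by UTF-8 JSON."""
    payload = json.dumps(msg, separators=(",", ":")).encode("utf-8")
    return struct.pack(">I", len(payload)) + payload


class Decoder:
    """Accumulates stream bytes and yields complete messages as they arrive."""

    def __init__(self):
        self._buf = bytearray()

    def feed(self, data):
        self._buf.extend(data)
        messages = []
        while len(self._buf) >= 4:
            (n,) = struct.unpack(">I", self._buf[:4])
            if len(self._buf) < 4 + n:
                break  # wait for the rest of this message
            messages.append(json.loads(self._buf[4:4 + n].decode("utf-8")))
            del self._buf[:4 + n]
        return messages


wire = encode({"type": "stimulus", "id": 3}) + encode({"type": "response", "choice": "B"})
decoder = Decoder()
first = decoder.feed(wire[:5])   # partial data: no complete message yet
rest = decoder.feed(wire[5:])    # both messages now complete
```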

[This work is supported by the U.S. Army Medical Research and Materiel Command under Contract No. W81XWH-13-C-0194. The views, opinions and/or findings contained in this report are those of the author(s) and should not be construed as an official Department of the Army position, policy or decision unless so designated by other documentation.]